
Learning for the PhD in the age of GenAI

by Luis P. Prieto - 13 minutes read - 2729 words

Generative artificial intelligence (GenAI) tools like ChatGPT have been hailed as both a revolutionary resource and an apocalypse for education. Among graduate and doctoral students around me, and in my own everyday learning as a researcher, I see both futures playing out. How can we use these tools to support our learning as budding researchers, rather than de-skill ourselves? This post reminds us that the doctoral journey is a learning journey and highlights a key aspect of any learning process, along with different ways we can engage in it. Then, I draw on research-based ideas to outline (tentative) principles for learning effectively with (or without) these AI tools.

I’m about to start teaching a master’s-level course on research methodology, which is also taken by many doctoral students. Like many other university teachers, I’ve noticed a troubling trend: shallower engagement with materials, assignments that appear AI-generated1, and students who are less articulate when asked orally about course concepts. While there is rarely definitive proof, it seems that many learners (including doctoral students) are doing worse as a result of using generative AI (GenAI). It is still too early to say (people started using ChatGPT massively only in 2023), but from what I see in recent media (e.g., here, here, here, here) and in educational research (e.g., here, here, here), I’m not alone in noticing these questionable impacts of (mis)using GenAI for learning. A common realization across these sources: when people use AI in learning, sometimes the outputs improve (this is what most positive GenAI-Ed research measures2)… but the learners get worse: weaker skills, less knowledge, or more “metacognitive laziness,” especially in orienting themselves to tasks and evaluating their own work.3

Why is this relevant for the readers of this blog (mostly PhD students)? Although many consider the PhD “just a job,” the word “student” is there for a reason. The PhD (and research in general) is fundamentally about learning: mastering the literature of a narrow field, acquiring research skills to investigate further, proposing something novel, and then using those skills to evaluate the value of our proposal. The PhD means learning a subject without a set curriculum or pedagogy, having to create both and develop the meta-skills to learn along the way. This is why many find the PhD difficult, and why we must be cautious about GenAI’s potential dangers for learning. The more I look at doctoral students around me, and at the papers I read (and write) in my research work, the more urgent this topic seems.

This post gathers initial ideas about how to approach learning (for the PhD and in general) in a world where GenAI tools are widely available, easy to use, and tempting us with the promise of better outputs—at an uncertain price for our (metacognitive and otherwise) skills.

All the learning sciences in a nutshell

Let’s start from first principles. Setting GenAI tools aside, what is the best way to learn? I’m not a pedagogist or learning scientist (though I’ve worked with many), but if we are talking about cognitive learning (not physical skills), it’s basically about active, hard mental engagement with the topic, concepts, or skill we want to learn. In a sense, all of pedagogy and education (from “deliberate practice” to “active learning,” “inquiry-based learning”… or even later attempts to support learning with GenAI) is about keeping learners interested, paying attention, and working actively. This can mean structuring activities so tasks are feasible (not demotivatingly hard) or organizing groups to collaborate on a learning task (thus engaging our social instincts and forcing us to actively explain our understanding to others).

Thus, feeling like we are making a big effort (on the right things, not extraneous load) when learning is, as programmers say, “not a bug, but a feature.” If it feels pleasurable and easy, we are probably not learning that much.

A slightly more complex view

If we go one level deeper into what “active, hard engagement” means, a few widely used educational frameworks can help:

- The ICAP framework4 classifies engagement with learning materials: passive (receiving information), active (motoric actions like highlighting or taking notes), constructive (producing new outputs), and interactive (dialogue with someone to build on each other’s ideas).

- Bloom’s (revised) taxonomy categorizes cognitive processes, roughly in order of increasing complexity: remembering (facts and concepts), understanding (explaining in one’s own words), applying (using information in new situations), analyzing (decomposing or connecting ideas), evaluating (critiquing or justifying), and creating (producing new, original artifacts).

We can use one or both frameworks to analyze how we are learning. In both, the goal is to reach higher levels of engagement and complexity (often called “higher-order thinking”). This usually means activities of increasing complexity, though sometimes starting with creating or interacting can keep things interesting (hence, motivating). In any case, the more diverse our engagement and cognitive processing, the deeper our understanding will become.5

11 ~~rules guidelines heuristics~~ tips on learning for the PhD in the age of GenAI

Now that we know a bit about what matters in learning and the kinds of activities we can engage in, here are some ideas on how we can better use (or avoid using) GenAI tools for learning in the PhD (and elsewhere):

1. Focus on the process, not (just) the outcome. We often think only about the deliverable asked of us (the paper, essay, data analysis…) and how to get there fast (e.g., asking ChatGPT to write it). Most ineffective ways of using GenAI for learning are exclusively output-focused6. Instead, we should focus on the steps needed: reading (and deciding which sources), brainstorming, summarizing, synthesizing in our own words. Make an explicit “learning plan” (even a simple bullet list). Bonus points if it also includes the time each step will take. Don’t just fire up the chatbot and ask for the answer (which is fine for random facts and curiosities, but not for things we want to understand deeply).

2. Engage varied cognitive processes. Our “learning plan” should include steps at different levels of the frameworks above, reaching higher complexity at some point. Having GenAI summarize a paper is fine sometimes, but it turns a constructive task into a passive one. Instead, use GenAI for higher-order activities: asking it to critique our ideas, impersonate someone with opposing views, or argue with us. For instance, Mollick and others have compiled a list of such GenAI learning activities with prompts.

3. Try yourself first. Once we see learning as process, not product, it’s clear that shortcuts with GenAI eliminate our practice of skills like summarizing, connecting concepts, clarifying thoughts, generating ideas… and over time, we lose those skills (you know, “use it or lose it”). By learning “the easy way,” we risk unlearning skills we already had! To counter this, we should try the higher-order task ourselves first before asking the AI. We may be surprised to find that many AI-generated ideas are actually weaker than our own.

4. Be transparent about AI use. Thinking of learning as process also means we should document that process, so we can track our progress, know which ideas were ours, reproduce our own work, or report it transparently if needed. Researchers already do this in lab notebooks or papers’ methods sections. Extending reproducibility to our learning is natural, and good practice for us as researchers. We often tell master’s students to keep “methodological notes”: bullet points of steps taken, links to partial documents or chatbot conversations. Adding time estimates also helps with time management.

5. Create your own knowledge representations (without AI). GenAI tools are powerful at producing summaries or diagrams of complex texts and materials. But synthesizing and relating ideas across sources is a core research skill, perhaps the most important one we want to preserve. So we should create our own knowledge representations first (see #3 above), and only then refine them with AI. Of course, we can adjust depending on the importance of the concept: it’s fine to use AI to summarize peripheral ideas or sources, but be wary with central ones (unless stress-testing your own ideas, see point #8 below).

6. Never just copy-paste GenAI outputs. Avoiding product-orientation means never just submitting GenAI outputs as our own. Besides being unethical (it’s plagiarism), it wastes a learning opportunity. Instead, we should read critically, evaluate, edit, and make the AI-generated text ours, and then report AI use (#4). This way we practice important skills rather than losing them.

7. Don’t be in a hurry. A major cause of GenAI misuse (and much unethical behavior7) is time pressure. When rushed, we act less ethically and think less deeply. Thus, a simple trick to use GenAI better is to not do things in a hurry: never wait until the last minute for assignments or cram too many learning activities into short timespans. We’ll feel less tempted to just ask the chatbot, and slower reflection usually leads to deeper learning (as long as we are not bored out of our minds 😊).

8. Don’t be biased, be dialectic. GenAI tools tend to be sycophantic8, agreeing with our views, even if they’re factually wrong. This is toxic for researchers striving to confront bias. It can also be dangerous for mental health. We should beware of “memory” features in these tools, which can reinforce our views. To counter this, we can ask AI to explicitly criticize our work, or impersonate an expert with opposing views. Multiple conversations (with AIs or humans) from different perspectives can lead us to a deeper, dialectic understanding (the classic dialectic of thesis-antithesis-synthesis). Starting a new chat helps avoid contamination/biasing from earlier interactions.

9. Adapt it to your interests, then don’t. GenAI tools excel at translating ideas between fields, making metaphors, and simplifying. We should use this to connect dry or complex ideas with our own interests. But beware: these metaphors may be inaccurate, plausible on the surface but flawed on inspection. After some of this “translation,” we should return to making our own knowledge representations (#5) and test them dialectically with an AI critic (#8), or better, with a colleague or more knowledgeable person’s feedback.

10. Know your prompting (just enough). GenAI tools are probabilistic, so outputs are not reproducible, and it is hard to predict whether the tool will do a good job at a certain task. Prompt quality (i.e., the exact way we give instructions to the AI) affects this a lot. Providing context and clear instructions often improves results. Though this may be less of an issue now, basics of prompt engineering remain useful, at least for complex tasks. One simple but effective introduction to prompting is this post. Writing a careful prompt has another benefit: it forces us to think through what we’re asking, what steps are needed, what skills we’re off-loading, and what output we want (i.e., it supports process-oriented learning, see #1).

11. Always ask, ‘what will I be losing?’ Over time, we can develop an instinct for “learning with AI.” Each time we prompt a chatbot, we should reflect: what skill or knowledge might I lose if I repeat this offloading? The goal isn’t to never use GenAI. If the task involves skills we lack or don’t value (say, filling reporting spreadsheets), then the tool may help us align with our values. Otherwise, think of the workout metaphor: using a GenAI chatbot in a purely product-oriented way can be like lifting weights with an exoskeleton. The weight is lifted, but are our muscles growing? We may become expert exoskeleton-drivers, but how functional will we be when the cybernetic help is not there?9

Addendum: Creativity (for the PhD) in the age of GenAI

The PhD (and research in general) is a deeply creative endeavor. While creating novel ideas or solutions is not exactly learning, many of the principles above also apply to creativity. If we see it as an open-ended skill we never fully master, creativity is a crucial skill for researchers. Yet GenAI tools seem to outpace us at idea generation: we can ask one for 100 research questions in our field, and it will deliver in seconds. The dangers of off-loading creativity (and the many other micro-skills involved in any complex task we do as researchers) to a tool are serious.

The answer is not simple: not all ideas are equally valid or diverse, and humans still seem better at assessing this and applying contextual knowledge than GenAI tools. So how do we avoid losing our creativity to the machine? As others have noted, we should apply principle #3 ruthlessly: try yourself first. Draft yourself first, generate ideas yourself first, talk with others first—then, if you wish, improve with AI.

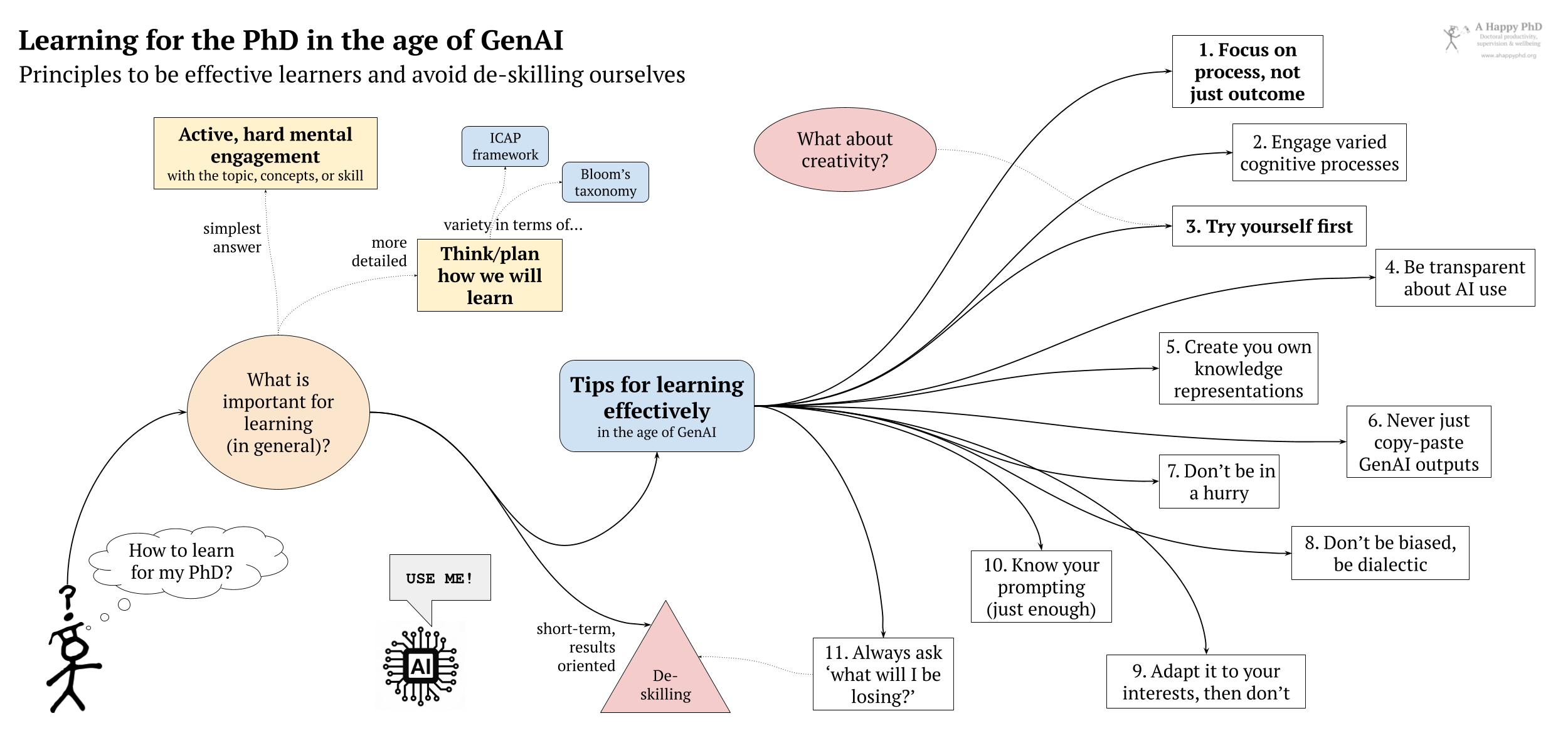

The following diagram summarizes the main ideas of this post:

In closing

Our brains have been shaped by millions of years of evolution to conserve energy. Hence all the shortcuts, biases, heuristics… that make us quirky, almost-logical creatures10. These “cheaper” ways of thinking worked well most of the time (in a paleolithic environment, at least). ChatGPT and similar tools are now the perfect shortcut for many of the complex operations we perform as learners and researchers with that energy-expensive brain. Yet if we want to upgrade our wetware (i.e., learn, which means rewiring our brain and nervous system), we need discernment: which shortcuts help, and which defeat the purpose of learning. I hope this post offered a few heuristics to make that discernment a bit less costly :)

This post has greatly benefitted from discussions with Yannis Dimitriadis (with whom I teach the courses I mention, and who knows far more about learning, research, and AI than me). All mistakes, however, are only mine.

NB: I also used ChatGPT to trim the prose of the post, while keeping all the ideas and terminology. Yes, I had to edit it manually afterwards to add back stuff I consider important, which the AI had “over-trimmed.” Overall, it did not save me time, but I hope it will save you readers hundreds of minutes of collective reading time. Let me know if you’d prefer I don’t do this :)

Header image by ChatGPT5. The prompt/conversation that led to it is too long to post here (it took many tries).

1. How do I know? Like many academics, I have now developed a (probably imperfect, but also probably better than chance) “sense of smell” for this. You have probably seen this yourself in its most blatant forms: grammatically perfect (even if the supposed author has limited English skills), upbeat, a tad too flowery and semantically fuzzy prose that looks more like marketing copy than the imperfect but thoughtful reflection of a fellow human.

2. Yan, L., Greiff, S., Lodge, J. M., & Gašević, D. (2025). Distinguishing performance gains from learning when using generative AI. Nature Reviews Psychology, 4(7), 435–436. https://doi.org/10.1038/s44159-025-00467-5

3. Fan, Y., Tang, L., Le, H., Shen, K., Tan, S., Zhao, Y., Shen, Y., Li, X., & Gašević, D. (2025). Beware of metacognitive laziness: Effects of generative artificial intelligence on learning motivation, processes, and performance. British Journal of Educational Technology, 56(2), 489–530. https://doi.org/10.1111/bjet.13544

4. Chi, M. T., & Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243. https://doi.org/10.1080/00461520.2014.965823

5. This is why the (now apparently defunct due to GenAI) writing of essays was such a staple of higher education: if done well, it forces us to engage at higher levels of complexity. It wasn’t necessarily because our teachers wanted to see us suffer :)

6. Notice the little endings in most of these chatbots’ responses (especially in recent months): are they trying to be helpful by producing an output for us? This little tactic is great for driving up engagement… but deadly in the long term for our constructive skills and our learning.

7. Darley, J. M., & Batson, C. D. (1973). “From Jerusalem to Jericho”: A study of situational and dispositional variables in helping behavior. Journal of Personality and Social Psychology, 27(1), 100–108. https://doi.org/10.1037/h0034449

8. Sharma, M., Tong, M., Korbak, T., Duvenaud, D., Askell, A., Bowman, S. R., … & Perez, E. (2023). Towards understanding sycophancy in language models. arXiv preprint arXiv:2310.13548.

9. Recent events like the blackout that affected most of Spain and Portugal this spring reminded me starkly that so much of what we assume will always be there sometimes is not. Or just go for a walk in some remote mountain area. What skills do we have left, outside the cocoon of our modern digital technologies?

10. Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux. https://us.macmillan.com/books/9780374533557

Luis P. Prieto

Luis P. is a Ramón y Cajal research fellow at the University of Valladolid (Spain), investigating learning technologies, especially learning analytics. He is also an avid learner about doctoral education and supervision, and he's the main author at the A Happy PhD blog.